The Evolution of Software Security Assurance. Part 2

Code Review

Now, probably my favorite: code review. As I said, I got into software security from a code review and static analysis standpoint. The origins of what we do in code review today really come from formal methods and verification processes. One of the most seminal works in this area was a document called the “Orange Book”, formally the “Trusted Computer System Evaluation Criteria”, published by the DoD in 1983 and revised since then. The idea is that you can inspect software at the source code level without executing it, without having to put it in all the various states that you want to test. The benefit is that you get complete, consistent coverage across the entire codebase. It also gives you the root cause of the problem: as opposed to pointing out a URL or a parameter that might be vulnerable, with code review you can point a developer to the line of code where the vulnerability actually exists and where they need to implement a solution.

Early static analysis tools got a bad reputation when it came to helping developers with code review. In the early days, and we’re talking around 2000-2001, tools like RATS, ITS4, and Flawfinder weren’t really bug-finding tools. They were, in a way, glorified grep. They carried a long list of functions that might lead to security vulnerabilities, and they could search an entire program for every call to those functions or methods. The problem is that they were unable to tell which calls were really exploitable and which ones weren’t, and because of that they created a lot of false positives and a lot of work for developers.
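To make the “glorified grep” point concrete, here is a minimal sketch of that style of scanner. It is not how RATS, ITS4, or Flawfinder are actually implemented; the function list and the C sample are assumptions chosen for illustration. It flags every call to a listed function and cannot tell a harmless call from an exploitable one.

```python
import re

# Hypothetical list of "risky" C functions, in the spirit of early scanners.
RISKY_FUNCTIONS = ("strcpy", "sprintf", "gets", "system")
PATTERN = re.compile(r"\b(" + "|".join(RISKY_FUNCTIONS) + r")\s*\(")


def scan(source: str):
    """Return (line_number, function_name) for every call to a listed function."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in PATTERN.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits


# Illustrative C fragment: one harmless call, one dangerous call.
SAMPLE = '''\
char buf[16];
strcpy(buf, "hello");      /* constant source that fits: not exploitable */
strcpy(buf, argv[1]);      /* attacker-controlled source: exploitable */
'''

findings = scan(SAMPLE)
for lineno, name in findings:
    print(f"line {lineno}: call to {name}()")
```

Both calls are reported with equal weight, which is exactly the false-positive problem: the developer has to work out by hand that only the second one matters.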

One of the biggest differences in modern static analysis tools, and one of the biggest benefits that static analysis can bring to a security process today, is the ability to separate the exploitable vulnerabilities from the lower-priority, less exploitable ones and to prioritize accordingly.

Architecture of a static analysis engine

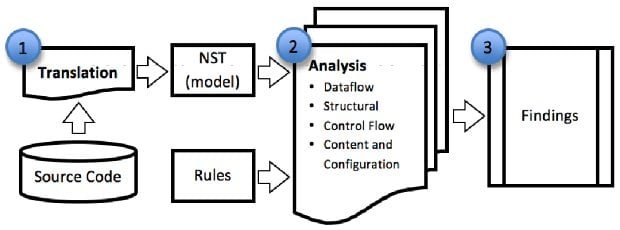

To give you an idea of how a static analysis tool works and what the architecture is, upfront we have a phase called “translation”. In translation we parse a bunch of source code, build a model of the application, and then on that model we perform our subsequent types of analysis. Most modern static analysis tools implement more than one kind of analysis, because different kinds of analysis are suited to find different kinds of vulnerabilities. In particular, things like dataflow analysis are very good at finding exploitable vulnerabilities and differentiating those from ones that might only be a bad practice or kind of bad code hygiene.
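As a rough illustration of translation followed by dataflow analysis, the sketch below parses a small program into an AST with Python’s standard `ast` module and then tracks tainted variables through straight-line assignments. It is a toy under stated assumptions, not the engine from the diagram: the names `input`, `execute`, and `escape` are hypothetical stand-ins for a source, a sink, and a sanitizer, and branches, loops, and function bodies are ignored.

```python
import ast

SOURCES = {"input"}       # hypothetical: calls returning attacker-controlled data
SINKS = {"execute"}       # hypothetical: calls that must not receive tainted data
SANITIZERS = {"escape"}   # hypothetical: calls whose result is treated as safe


def call_name(node):
    """Name of the function being called, for plain names and attributes."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute):
        return func.attr
    return None


def is_tainted(expr, tainted):
    """True if the expression may carry data that came from a source."""
    if isinstance(expr, ast.Call):
        name = call_name(expr)
        if name in SANITIZERS:
            return False
        if name in SOURCES:
            return True
    if isinstance(expr, ast.Name):
        return expr.id in tainted
    return any(is_tainted(child, tainted) for child in ast.iter_child_nodes(expr))


def analyze(source):
    """Return line numbers where tainted data reaches a sink."""
    tree = ast.parse(source)   # "translation": build a model of the program
    tainted = set()
    findings = []
    for stmt in tree.body:     # straight-line, top-level statements only
        # Check sinks first, using the taint state before this statement.
        for node in ast.walk(stmt):
            if isinstance(node, ast.Call) and call_name(node) in SINKS:
                if any(is_tainted(arg, tainted) for arg in node.args):
                    findings.append(node.lineno)
        # Then propagate taint through simple assignments.
        if isinstance(stmt, ast.Assign):
            value_tainted = is_tainted(stmt.value, tainted)
            for target in stmt.targets:
                if isinstance(target, ast.Name):
                    if value_tainted:
                        tainted.add(target.id)
                    else:
                        tainted.discard(target.id)
    return findings


SAMPLE = '''\
name = input()
safe = escape(name)
db.execute("SELECT * FROM users WHERE name = '" + name + "'")
db.execute("SELECT * FROM users WHERE name = '" + safe + "'")
db.execute("SELECT COUNT(*) FROM users")
'''

print(analyze(SAMPLE))
```

A pattern matcher would flag all three `execute` calls. The dataflow pass reports only the one on line 3, where unsanitized input reaches the query, which is the distinction between an exploitable issue and mere code hygiene.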

As an input to the analysis, you’ll notice on the diagram, there’s a set of rules: a knowledge base of security principles and security problems that we want to look for. Eventually, once the analysis is complete, we present findings to the user, and I think this is one of the most important stages in the review process because this is where the human gets to decide: “What’s important to our business? What’s a real vulnerability? What isn’t? Which ones do we need to prioritize for remediation?” And this is where the security of the software actually gets improved.

So, why is static analysis good for security? It’s very fast compared to manual code review. Anyone who has reviewed code by hand understands that they can probably get through a few hundred, maybe 400-500 lines of code before they are done for the day, exhausted, their eyes are glazed over. Static analysis, on the other hand, is able to hypothesize an infinite number of states that the software might be in, and completely and consistently check those for security vulnerabilities every time the analysis is performed. In addition, it’s fast compared to runtime testing techniques because of the ability to hypothesize, or simulate, states that the software might be in. It’s able to radically reduce the time it takes the tester to consider all of those possible states.

For developers and other people who aren’t security experts and aren’t part of the security team, one of the most important things that static analysis can provide is the security knowledge it brings with it. As opposed to just pointing out potential problems, it can also bring a description of those problems and advice on how to correct them, and provide those in context to the developer when he or she needs them.

As I mentioned and as I’ll continue to mention, the different techniques I talk about today feed into each other really nicely, and static analysis is no exception. In particular, static analysis can give a whitebox perspective to penetration testing activities. Otherwise, penetration testers have a blackbox perspective, looking at the application from the outside. With the benefit of static analysis they can see the implementation, the configuration files and so on, and gain insight into what the application is actually doing before they attack it.

Penetration Testing

So, let’s talk about penetration testing. The origins here are kind of twofold. One camp, which came from the Department of Defense with some funding from IBM back in those early software days, was called “tiger teams”. Tiger teams were all about looking at a deployed system, a collection of hardware and software, and deciding whether it was safe, whether it was good. They tried to beat on it as hard as they could to find security vulnerabilities, but it was within the context of one organization, usually internally focused.

In 1993 Dan Farmer wrote a paper, then released a tool called SATAN, and was subsequently fired for the combination of the two. That work began to say: “Okay, we shouldn’t just beat on our own systems when they are complete; we should think about the components that go into those systems, the individual software applications, whether we develop them internally or acquire them as off-the-shelf software, and execute the repertoire of attacks that we know those systems will face when they are actually deployed in the wild.” SATAN was the first such automated penetration testing tool: it collected the attacks that systems would face in the wild and mounted them against internal systems, or against any system whose security an organization was trying to determine.

There are a lot of automated tools today in this space; penetration testing has been heavily automated. Some of those do a better job than others, but all of them are quite effective at finding certain classes of vulnerabilities, and we’ll talk more about those in a moment.

A typical penetration test has three primary phases, and these are often repeated again and again. First, the penetration tester, whether it’s a human or a tool, will crawl the application and try to identify all the pages, all the URLs, all the parameters that the application exposes to the user. Then, for each one of those pages, the tool or the human tester executes a number of attacks. These are based on attacks that we’ve seen in the wild, or on attacks that we see being effective against certain kinds of vulnerable coding constructs that we have identified from the static analysis perspective, for example. Whatever they are, the collection of attacks gets mounted. The third phase is looking at the responses to those attacks: which ones were effective, which ones might have triggered an exception to be raised or some sensitive information to be leaked out of the site. Whichever attacks are effective, those are the ones that lead us to report a vulnerability, a weakness or a flaw in the system.
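The three phases can be sketched in a few lines. Everything here is an assumption made for illustration: the “application” is a toy in-memory table of handlers so that nothing touches a network, the parameter names are read from that table rather than discovered by parsing forms, and the two payloads and their success checks are deliberately simplistic.

```python
import re


# A toy in-memory "web application" (entirely hypothetical).
def home(params):
    return '<a href="/search">Search</a> <a href="/about">About</a>'


def search(params):
    q = params.get("q", "")
    if "'" in q:
        # Simulates a database error leaking into the response.
        return "500 SQL syntax error near '" + q + "'"
    return "Results for " + q  # reflects input without encoding


def about(params):
    return "About us"


# path -> (handler, parameter names the page accepts)
APP = {"/": (home, []), "/search": (search, ["q"]), "/about": (about, [])}

# attack name -> (payload, check that decides whether the attack worked)
ATTACKS = {
    "sql injection": ("' OR '1'='1", lambda body, payload: "SQL syntax error" in body),
    "cross-site scripting": ("<script>alert(1)</script>", lambda body, payload: payload in body),
}


def crawl(start="/"):
    """Phase 1: follow links to enumerate pages and their parameters."""
    seen, queue = [], [start]
    while queue:
        path = queue.pop(0)
        if path in seen or path not in APP:
            continue
        seen.append(path)
        handler = APP[path][0]
        queue.extend(re.findall(r'href="([^"]+)"', handler({})))
    return [(path, APP[path][1]) for path in seen]


def pentest():
    """Run all three phases and return (path, parameter, attack) findings."""
    findings = []
    for path, params in crawl():                      # phase 1: crawl
        handler = APP[path][0]
        for param in params:
            for name, (payload, succeeded) in ATTACKS.items():
                body = handler({param: payload})      # phase 2: attack
                if succeeded(body, payload):          # phase 3: analyze the response
                    findings.append((path, param, name))
    return findings


for path, param, name in pentest():
    print(f"{name} via parameter '{param}' on {path}")
```

Note that the findings identify a URL and a parameter, not a line of code, and that only pages reachable from the crawl are ever attacked, which is where the coverage limits of this approach come from.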

One of the big challenges of penetration testing is that it’s hard for it to do a comprehensive job: it’s hard to test every page for every kind of vulnerability and ensure that every path through the code behind that application has been followed. The reality is that, even with this challenge in coverage, penetration testing is highly effective at finding vulnerabilities in web applications today.

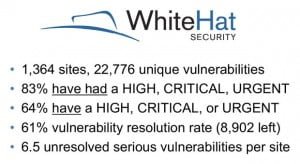

A company called WhiteHat Security provides penetration testing services, and they have released the statistics that you see on the slide (see image) on the likelihood that a given site, a given web application, will contain at least one instance of a certain class of vulnerability. You can see that they find cross-site scripting in 66% of web applications. Two thirds of the web applications that they’ve looked at are vulnerable to cross-site scripting, and are vulnerable in a way that penetration testing can find, even with the limitations that we discussed.

I think penetration testing, of the three fields that I’ve talked about today, can benefit the most from combination with the other techniques. And in particular, static analysis and penetration testing have a really nice relationship because of the blackbox/whitebox aspect. Research that I’ve worked on in the last few years falls under a title I like to call “correlation”. The idea here is we want to perform our penetration test, perform our static analysis, and then bring the results together in some very meaningful way.

As an example, imagine we found a SQL injection vulnerability dynamically, with our penetration test. With an existing static analysis we have already found a related SQL injection vulnerability, and also a log forging vulnerability that we haven’t found with our penetration test. Because we can correlate the static and dynamic results, we can do two things. First, we can reprioritize the static SQL injection vulnerability and give the developers root cause remediation details about the line of code they need to fix, as opposed to just the URL that the pentest would have told them about. Second, we can say that the other static analysis result, the log forging issue, should be reprioritized at a higher level as well, because it receives input from the same vulnerable parameter that the penetration test successfully used to exploit the SQL injection vulnerability. So in this case we are able to relate not only the same vulnerability found using static analysis and penetration testing, but also the dynamic vulnerability with other, similar static analysis vulnerabilities. And this is where the real power of correlation comes in.
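One way to picture this correlation is as a join between the two result sets on the input parameter. The sketch below is only an assumption about how such a step could look, not the implementation behind the research described above; the record fields, file names, and priority labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StaticFinding:
    category: str
    location: str       # file:line reported by the static analyzer
    source_param: str   # request parameter that feeds the flagged code


@dataclass(frozen=True)
class DynamicFinding:
    category: str
    url: str
    param: str          # parameter the penetration test exploited


def correlate(static_findings, dynamic_findings):
    """Return (static finding, priority, reason) triples."""
    confirmed = {(d.category, d.param) for d in dynamic_findings}
    exploited_params = {d.param for d in dynamic_findings}
    report = []
    for s in static_findings:
        if (s.category, s.source_param) in confirmed:
            report.append((s, "critical", "same issue confirmed by the penetration test"))
        elif s.source_param in exploited_params:
            report.append((s, "high", "fed by a parameter the penetration test exploited"))
        else:
            report.append((s, "unchanged", "no related dynamic result"))
    return report


# Hypothetical results mirroring the example in the text.
static_results = [
    StaticFinding("sql injection", "UserDao.java:42", "name"),
    StaticFinding("log forging", "AuditLog.java:17", "name"),
    StaticFinding("log forging", "Billing.java:88", "invoice"),
]
dynamic_results = [DynamicFinding("sql injection", "/search", "name")]

report = correlate(static_results, dynamic_results)
for finding, priority, reason in report:
    print(f"{finding.category} at {finding.location}: {priority} ({reason})")
```

The first static result is the same issue the pentest exploited, so it is escalated and comes with a line of code to fix. The second was never found dynamically but shares the exploited parameter, so it is raised as well. The third is left where it was.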

So, we have talked about these three disciplines of software security assurance: threat modeling, code review, and penetration testing. And we have seen that each of these has come from a very different set of origins: one from secure design principles, one from verification and formal methods, and one from trying to break or attack running systems, going back to the “tiger teams” of the early penetration testing days. These three disciplines, to be most effective moving forward, are going to begin to converge more and more. And they are going to converge under one of a couple of possible umbrellas.
